Ghostart: Helping teams act with confidence

When progress depends on founder approval, work slows down.

Client: Ghostart

Challenge:

Work relied on founder or senior leader approval to maintain quality, creating bottlenecks, rewrites and hesitation across the team.

What we did:

Designed a system that helps team members make better decisions independently while staying aligned.

Outcome:

Fewer approvals and rewrites, more confident action from the team and consistent quality without constant oversight.

The broader problem this illustrates

Some things only work when the founder gets involved - but that dependence quickly becomes a bottleneck. In many businesses, this shows up when:

team members hesitate because they’re unsure what ‘good’ looks like

work gets escalated ‘just to be safe’

founders or senior leaders end up reviewing, rewriting or approving everything

The result: progress slows down, senior people stay stuck in the work, and the business becomes harder to run as it grows.

Why content wasn’t the real problem

For years, we ran employee advocacy programmes for organisations including John Lewis & Partners, Post Office, Waitrose, BT, Iceland Foods, River Island and The Big Issue.

The goal was always the same: help employees share content and talk about the business on social media.

But too often the result was the opposite. Content felt corporate. Employees either avoided posting altogether or shared things that didn’t sound like them.

The model itself was broken.

The real problem (not "we need AI")

The issue wasn’t producing more content.

It was that:

Quality lived in a few people’s heads

Standards weren’t visible or shared, so judgement stayed with founders or comms teams.

People didn’t feel confident deciding what to say

Without clarity on what “good” looked like, work stalled or was escalated “just to be safe”.

Templates replaced judgement

What was meant to help people post safely stripped out voice and intent.

Oversight became the safety net

Review and approval filled the gap left by missing confidence.

The result: low participation, generic content, slow turnaround and senior people staying involved far longer than they wanted to.

Before building anything new, we had to fix that.

How we approached it

We followed the same principle we use in all our work: help people act independently without lowering standards.

Captured how people actually think and decide

Instead of asking people to define how they write, we designed exercises that show how they actually think and explain things. This gave us real material to work with - not abstract preferences.

Made quality visible before generation

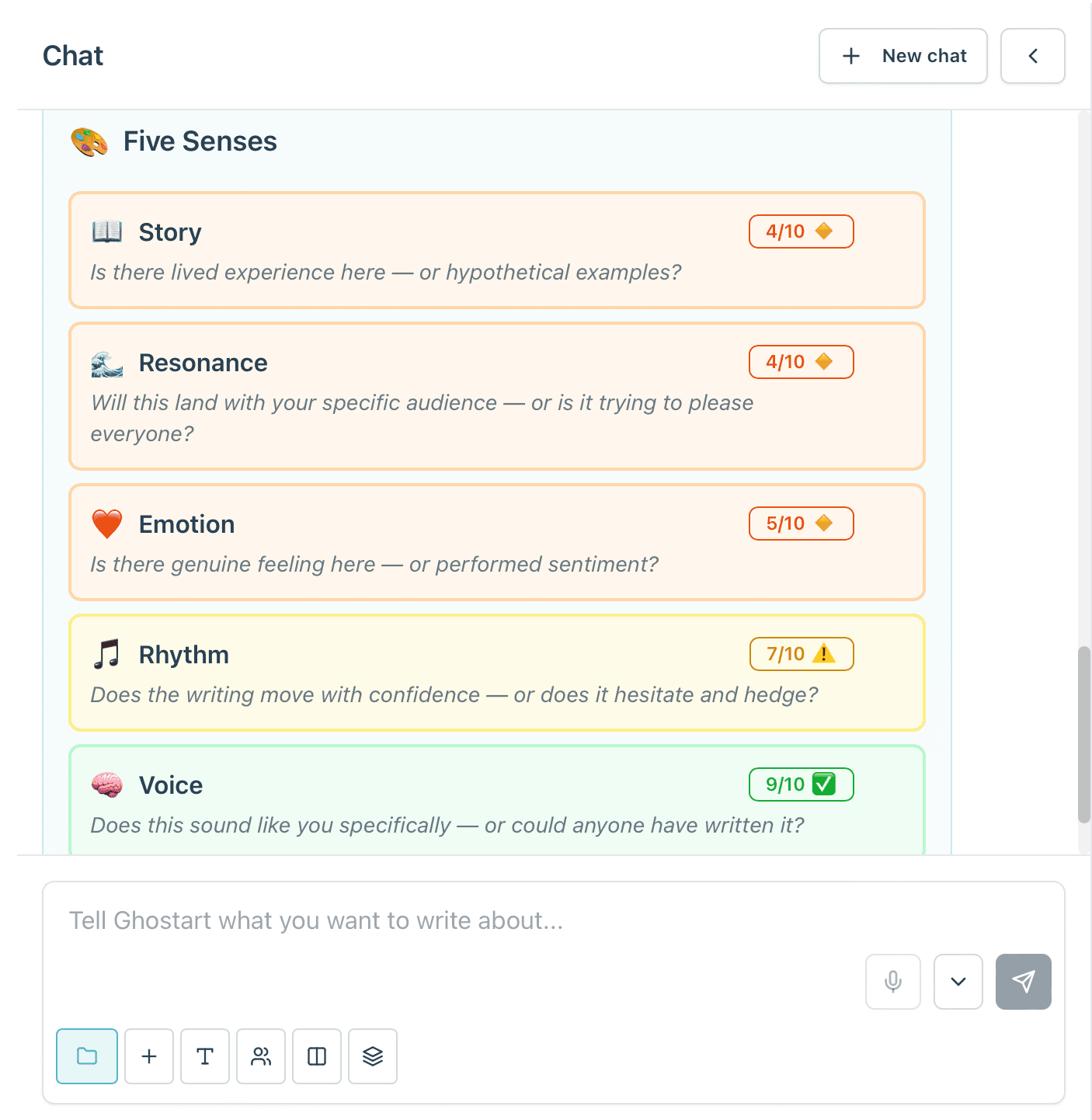

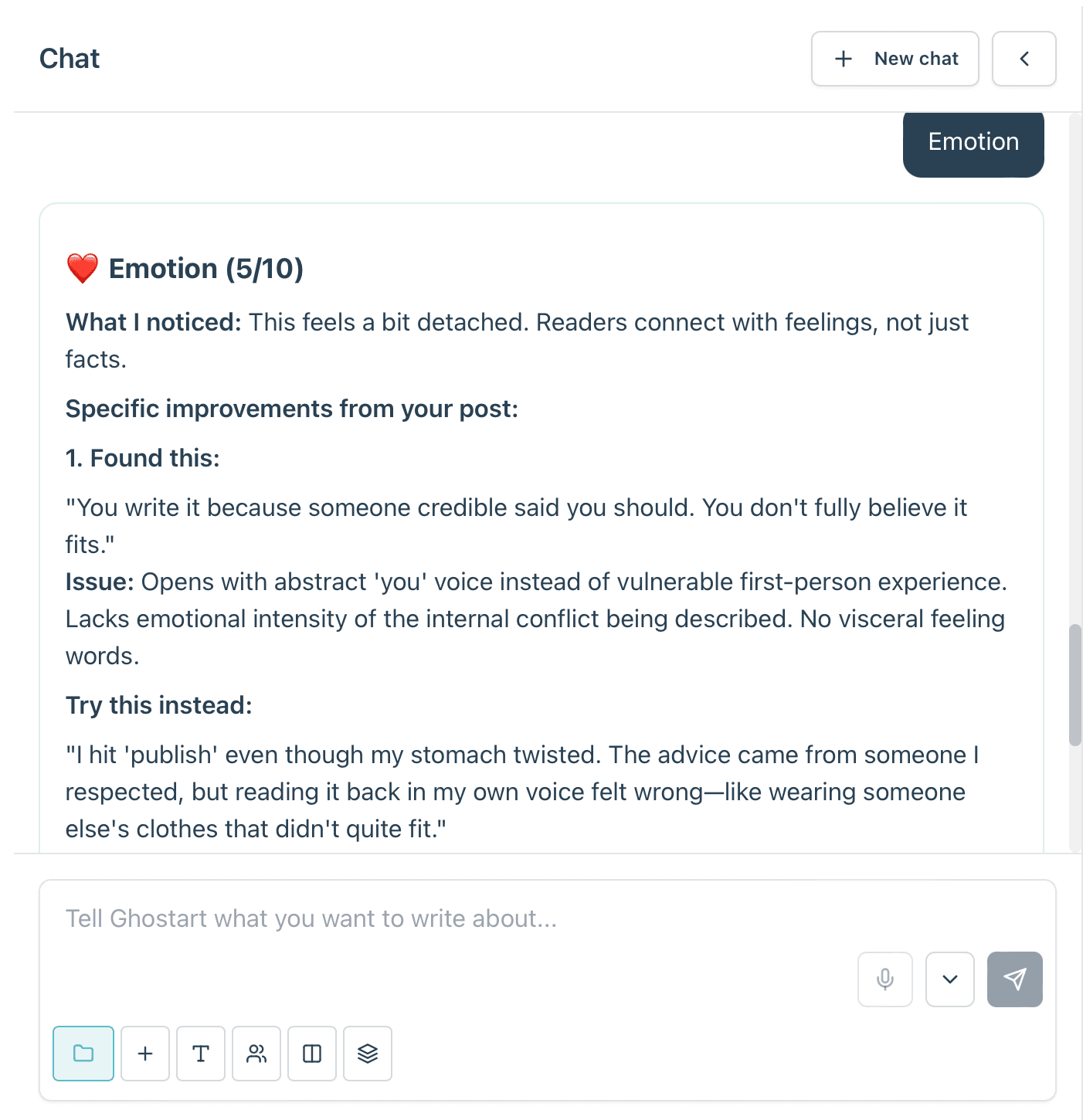

We built the Beige-ometer: a way of assessing how generic something sounds across five dimensions (voice, emotion, rhythm, story, resonance). This meant quality was considered before anything was produced, not corrected afterwards.
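To make the idea concrete, here is a minimal sketch of how a five-dimension assessment like this could be represented. It is an illustration only, not how the Beige-ometer is built: the dimension names come from the description above, while the 0 to 10 scale (higher meaning more generic), the unweighted average, the `BeigeScore` class, the `needs_rework` helper and its threshold are all assumptions made for the example.

```python
from dataclasses import dataclass, fields


# Hypothetical model. Only the five dimension names come from the case study;
# the 0-10 scale, the averaging and the threshold are illustrative assumptions.
@dataclass(frozen=True)
class BeigeScore:
    voice: float
    emotion: float
    rhythm: float
    story: float
    resonance: float

    def __post_init__(self):
        # Reject scores outside the assumed 0-10 range.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0 <= value <= 10:
                raise ValueError(f"{f.name} must be between 0 and 10, got {value}")

    def overall(self) -> float:
        """Unweighted mean of the five dimensions (higher = more generic)."""
        values = [getattr(self, f.name) for f in fields(self)]
        return sum(values) / len(values)

    def most_generic(self) -> str:
        """Name of the dimension with the highest genericness score."""
        return max(fields(self), key=lambda f: getattr(self, f.name)).name


def needs_rework(score: BeigeScore, threshold: float = 6.0) -> bool:
    """Flag a draft whose overall score reaches the assumed threshold."""
    return score.overall() >= threshold


# Example: a draft that reads as generic, mostly because it lacks a story.
draft = BeigeScore(voice=8, emotion=7, rhythm=4, story=9, resonance=6)
print(draft.most_generic(), needs_rework(draft))
```

Keeping the five scores separate, rather than collapsing them into one number straight away, is what lets a writer see which aspect of a draft to work on before anything is published.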

Supported decision-making, not just output

When content is generated, it draws on what the user has already shared about how they think and communicate. The system helps people decide what to say and how to say it - rather than guessing for them.

Improved through use, not training

Feedback loops from the Beige-ometer allow people to improve over time. As team members use it, they become clearer and more confident - and the system becomes more useful.

What changed

Posts generated in the first 30 days of beta

Users testing the system independently

Confidence to act without constant escalation

More importantly, the behaviour changed. People who completed the exercises generated content regularly, needed less reassurance, and spent less time second-guessing.

The constraint wasn’t motivation. It was whether the system had given them enough clarity to act.

Why this worked

We didn’t start with "let’s build an AI writing tool."

We started with: "Why does this work feel safe only when senior people are involved - and how do we change that?"

The answer wasn’t more rules, templates or approvals. It was a system that helps people understand what good looks like and make decisions for themselves.

AI supports that system. It doesn’t replace it.

What this proves

Ghostart shows how we approach problems where:

quality matters

decisions are subjective

delegation feels risky

senior people are becoming bottlenecks

By designing systems that support better decisions, we help businesses move faster without losing what makes their work good.

What it looks like in practice

The interface is deliberately simple. Users aren’t wrestling with prompts or settings. They work through short exercises, then generate content that reflects what they’ve already told the system about themselves.

The Beige-ometer makes quality visible before anything is generated.

The resulting content sounds human and requires less review, giving the team more confidence to act without escalation.

The interface helps users articulate their own thoughts, rather than just prompting a bot.

Facing something similar?

If parts of your business still rely on senior people being involved "just to be safe" - and that’s starting to slow things down - we should talk.

We’ll start by understanding where decisions get stuck, not by assuming AI is the answer.